Blog

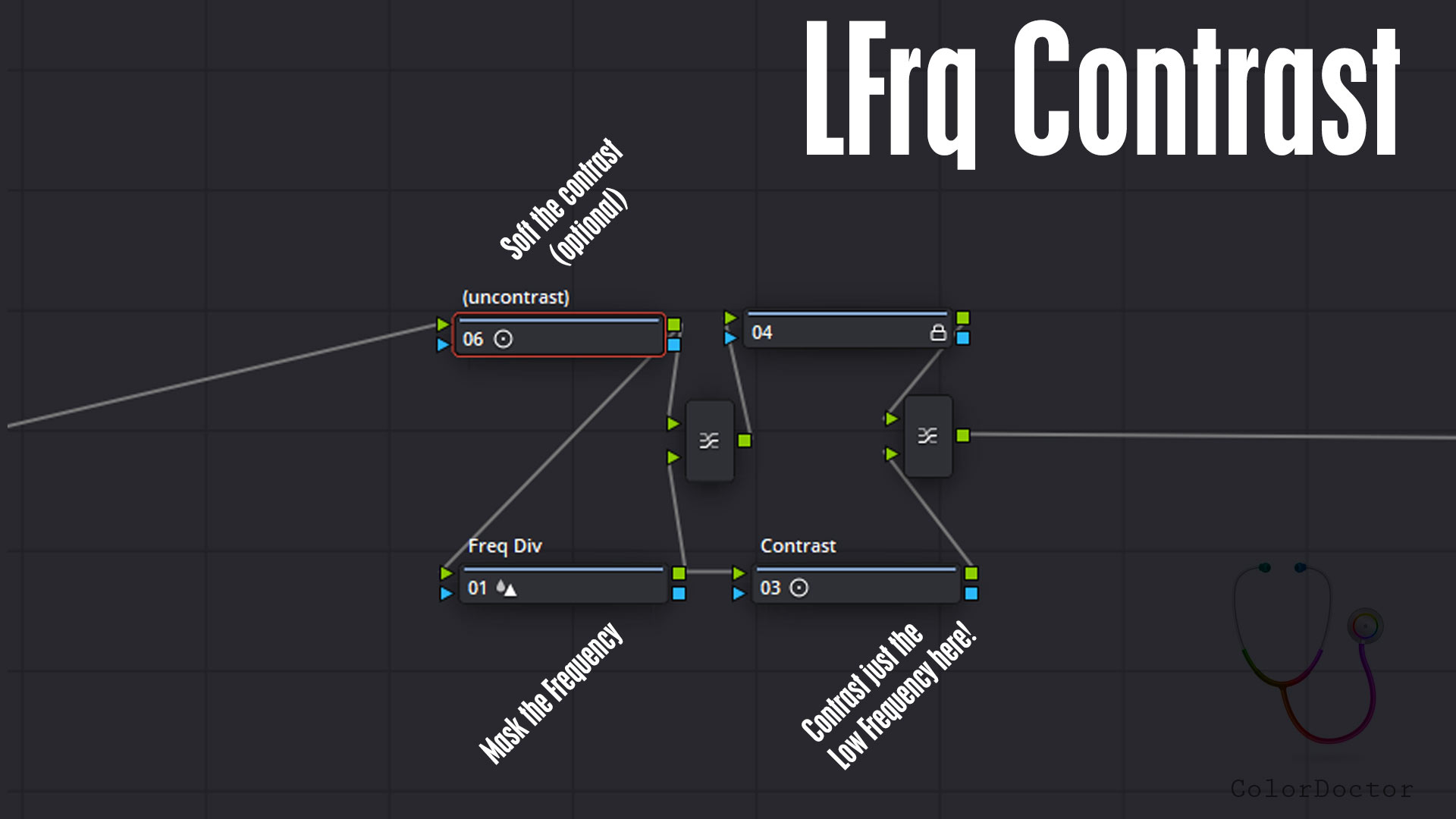

This node setup lets you boost contrast only in the low spatial frequencies.

This way, you can raise the contrast without crisping the fine texture detail, which sits at higher spatial frequencies.

(uncontrast)

You can optionally use this node to reduce the contrast slightly before boosting it in the low frequencies. By default it is left unedited.

Freq Div

In this node the Blur > Radius parameter is edited to split off the low frequencies, where the contrast will be processed separately. The higher the value, the more detail is kept out of the low-frequency band, so the contrast change acts only on broader structures.
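As a rough illustration of what this frequency split does, here is a minimal Python sketch on a single hypothetical scanline. It assumes a simple box blur stands in for Resolve's Blur > Radius (Resolve's actual blur kernel may differ), and the names `box_blur` and `split_frequencies` are hypothetical. The blurred copy is the low-frequency band. Subtracting it from the original leaves the high-frequency band, so the two always add back up to the original.

```python
def box_blur(signal, radius):
    """Average each sample with its neighbours within `radius` (window clamped at the edges)."""
    n = len(signal)
    blurred = []
    for i in range(n):
        lo = max(0, i - radius)
        hi = min(n, i + radius + 1)
        window = signal[lo:hi]
        blurred.append(sum(window) / len(window))
    return blurred


def split_frequencies(signal, radius):
    """Return (low, high) so that low[i] + high[i] reconstructs signal[i]."""
    low = box_blur(signal, radius)
    high = [s - l for s, l in zip(signal, low)]
    return low, high


# Hypothetical scanline: a broad brightness ramp with a fine alternating texture on top.
scanline = [0.2 + 0.05 * i + (0.02 if i % 2 else -0.02) for i in range(10)]
low, high = split_frequencies(scanline, radius=2)
print("low :", [round(v, 3) for v in low])
print("high:", [round(v, 3) for v in high])
```

A larger `radius` makes `low` smoother and moves more of the detail into `high`. With `radius=0` the blur does nothing and `high` is all zeros.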

Contrast

In this node, increase Contrast. The change affects only the lower frequencies, so contrast increases without altering the detail or textures left out of the mask defined in the previous node. You can also experiment with Pivot, and with MD (midtone detail), to adjust texture detail without changing the overall contrast or the high-frequency detail.
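The idea behind this node can be sketched numerically. This minimal Python example assumes the image has already been split into a low band (the blurred image) and a high band (original minus blur). It also assumes Contrast behaves as a linear scaling around Pivot, `pivot + (x - pivot) * contrast`; Resolve's actual contrast curve may differ. The function name `apply_low_contrast` is hypothetical.

```python
def apply_low_contrast(low, high, contrast, pivot=0.5):
    """Scale the low-frequency band around `pivot`, then add the untouched high band back."""
    graded_low = [pivot + (l - pivot) * contrast for l in low]
    return [g + h for g, h in zip(graded_low, high)]


# Hypothetical bands, e.g. from blurring a scanline (low) and subtracting the blur (high).
low = [0.3, 0.5, 0.7]
high = [0.02, -0.02, 0.02]
print([round(v, 3) for v in apply_low_contrast(low, high, contrast=1.5, pivot=0.5)])
# prints [0.22, 0.48, 0.82]
```

Each output sample differs from its graded low-band value by exactly the original high-band value. This is why the texture detail survives the contrast boost unchanged.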

Link to the .drx here

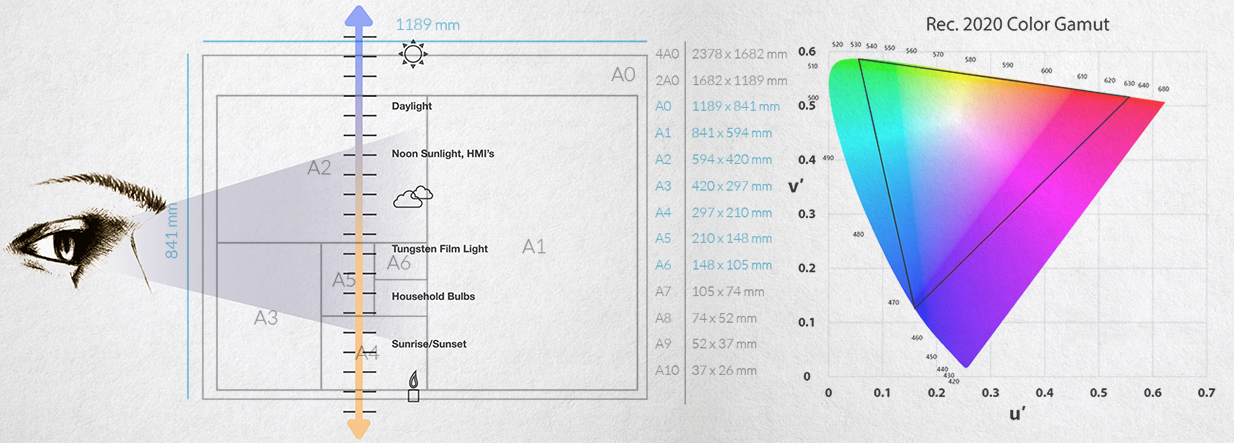

A pixel by itself is dimensionless. It has no absolute measure, only a relative one, and this holds as much for resolution as for color. The first property is easy to grasp, but the second is much less so. Sometimes it helps to reason carefully through a phenomenon we already understand in order to grasp a more abstract concept.

A digital image is built from pixels, which are eventually turned into visible dots. But knowing the number of pixels in a file tells us nothing about the actual size at which the image can be reproduced. The pixel is a relative measure, whereas the metre is an absolute one. A sheet of paper cannot be measured in pixels, but it can be measured against the metre bar, which is a real object. The pixel is virtual and has no length. Defining the PPI (pixels per inch) establishes the relation between real length and virtual points, so that each pixel can be printed at a particular size. The PPI expresses how many pixels fit in one inch, so together with the pixel count it determines the physical size of the print. What remains is to establish what color these dots will be, which the pixel expresses through a mathematical model.
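The relation between pixel count, PPI and physical size is plain division. Here is a small Python sketch; the file size of 3000 x 2400 px and the function name `print_size` are hypothetical examples, and 2.54 cm per inch is the standard conversion.

```python
CM_PER_INCH = 2.54


def print_size(width_px, height_px, ppi):
    """Physical print size in inches and centimetres for a given pixel count and PPI."""
    width_in = width_px / ppi
    height_in = height_px / ppi
    return (width_in, height_in), (width_in * CM_PER_INCH, height_in * CM_PER_INCH)


# The same hypothetical 3000 x 2400 px file printed at two different densities.
for ppi in (300, 150):
    (w_in, h_in), (w_cm, h_cm) = print_size(3000, 2400, ppi)
    print(f"{ppi} PPI: {w_in:.1f} x {h_in:.1f} in  ({w_cm:.2f} x {h_cm:.2f} cm)")
```

The pixel count never changes. Only the PPI decides whether the file becomes a 10 x 8 inch print or a 20 x 16 inch one, with each pixel twice as large in the second case.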

A pixel does not, by itself, express a color.

It becomes a color only through a physical device that turns it into something real, such as a printer, a projector or a monitor. The device therefore defines the final color the pixel can express. The numbers that make up a pixel's value can be written in different mathematical languages, which describe the color model. For example, YUV, RGB, Lab and XYZ are models that can express exactly the same color with different triplets of numbers. It is like naming the color red in different languages: Rouge, Rojo, Vermelho.
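To see the same color written as two different triplets, here is a sketch converting R'G'B' to Y'CbCr, the form usually meant by "YUV" in video. It assumes normalized, gamma-encoded R'G'B' values in 0..1 and the Rec.709 luma coefficients (Kr = 0.2126, Kb = 0.0722). It leaves out the integer offsets and quantization that real video files use. The function names are hypothetical.

```python
KR, KB = 0.2126, 0.0722   # Rec.709 luma coefficients
KG = 1.0 - KR - KB        # 0.7152


def rgb_to_ycbcr(r, g, b):
    """Normalized R'G'B' (0..1) -> Y' (0..1), Cb and Cr (-0.5..0.5), Rec.709 weights."""
    y = KR * r + KG * g + KB * b
    cb = (b - y) / (2.0 * (1.0 - KB))
    cr = (r - y) / (2.0 * (1.0 - KR))
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr."""
    r = y + 2.0 * (1.0 - KR) * cr
    b = y + 2.0 * (1.0 - KB) * cb
    g = (y - KR * r - KB * b) / KG
    return r, g, b


red_rgb = (1.0, 0.0, 0.0)
red_ycbcr = rgb_to_ycbcr(*red_rgb)
print("R'G'B':", red_rgb)
print("Y'CbCr:", tuple(round(v, 4) for v in red_ycbcr))
```

Both triplets describe the same red, and converting back recovers the original R'G'B' values. Neither triplet says what red a given display will actually produce.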

Yet "red" still does not name a specific color.

To specify what "red" is, we need a device that represents it: a projector, a screen, or a sheet printed with inks. Different color-rendering technologies achieve different reds, because devices generally build their palette by combining just three primaries. The purest colors a device can show are therefore those three primaries, unmixed. Under an RGB model, 100% of one primary with 0% of the other two marks the extreme limit of chromatic purity. But the pixel is still virtual: a numerical triplet that does not mean any specific color, only a "maximum" of something we don't know yet. These three extreme values of the pure RGB primaries become measurable when the reproduction device turns the virtual values into real ones. There they are defined in absolute terms, and this defines the native gamut of the device. To make reproducible colors compatible across different display technologies, standards have been agreed that define gamuts, such as the Rec.709 (HD) and Rec.2020 (UHD) television recommendations, or DCI-P3 for cinema projection. Device manufacturers should then reproduce the colors of the standard and limit the out-of-standard colors the device could otherwise generate. They should also correctly reproduce all the intermediate tones obtained by mixing the three primaries.
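One way to compare these standards is to look at the triangle their three primaries span on the CIE 1931 xy chromaticity diagram. The sketch below uses the published xy coordinates of the red, green and blue primaries and the shoelace formula. Treat the result as a crude size comparison only: the xy diagram is not perceptually uniform, and gamut volume also depends on luminance. The names `PRIMARIES` and `polygon_area` are hypothetical.

```python
# CIE 1931 xy chromaticities of the red, green and blue primaries.
PRIMARIES = {
    "Rec.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def polygon_area(points):
    """Shoelace formula for the area of a polygon given as (x, y) vertices."""
    total = 0.0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


base = polygon_area(PRIMARIES["Rec.709"])
for name, prims in PRIMARIES.items():
    area = polygon_area(prims)
    print(f"{name:9s} xy area = {area:.4f}  ({area / base:.2f} x Rec.709)")
```

On this crude measure, DCI-P3 covers roughly 1.36 times the Rec.709 triangle and Rec.2020 roughly 1.89 times.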

This process is called calibration.

However, these TV standards only defined the chromatic purity of the primaries. They said nothing about the brightness levels of black and white; in other words, they did not standardize the dynamic range. An image file, in its spatial dimensions, can be printed on an A4 sheet or on larger ones such as A3 or A2, but this would not improve the sharpness of the image. The pixels are simply printed larger and occupy more space on the sheet, with no gain in definition. To print at a larger size without compromising definition, we need files with more pixels. If the width of the page were chromatic purity, its height would be the dynamic range. A wide-gamut, high-dynamic-range image could then be "printed" on a sheet that is both wide and tall. For gamut and dynamic range, what keeps the individual pixel from becoming visible is not the number of pixels per file but the number of bits per pixel. If changing a single RGB code value produces a visible step in tone, the pixel depth, measured in bits, is insufficient.

So wide gamut and high dynamic range images need files with many bits.

In the same way, we needed files with many pixels to print large sheets without the printed pixels becoming visible. HDR television is defined by Rec.2100, which inherits the wide gamut of Rec.2020 along with UHD resolution (close to 4K). It requires a greater depth of 10 to 12 bits, depending on the variant: HLG, HDR10 or Dolby Vision (DoVi).
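The effect of bit depth on visible steps can be counted directly. This sketch assumes simple full-range quantization of a normalized 0..1 signal. Real video usually uses limited ("legal") range, and Rec.2100 encodes values through PQ or HLG curves, so actual step visibility differs. The function names and the test gradient are hypothetical.

```python
def code_values(bits):
    """Number of distinct code values per channel at a given bit depth."""
    return 2 ** bits


def quantize(value, bits):
    """Round a normalized 0..1 value to the nearest code value at `bits` (full range)."""
    top = 2 ** bits - 1
    return round(value * top) / top


# A subtle, smooth gradient covering only 1% of the signal range, like a slow sky ramp.
gradient = [0.01 * i / 99 for i in range(100)]

for bits in (8, 10, 12):
    steps = len({quantize(v, bits) for v in gradient})
    print(f"{bits:2d}-bit: {code_values(bits):5d} code values, "
          f"step {1 / (2 ** bits - 1):.5f}, {steps} distinct levels across the gradient")
```

The same smooth ramp collapses into a handful of levels at 8 bits but dozens at 12 bits. Fewer, larger steps are what show up as banding.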

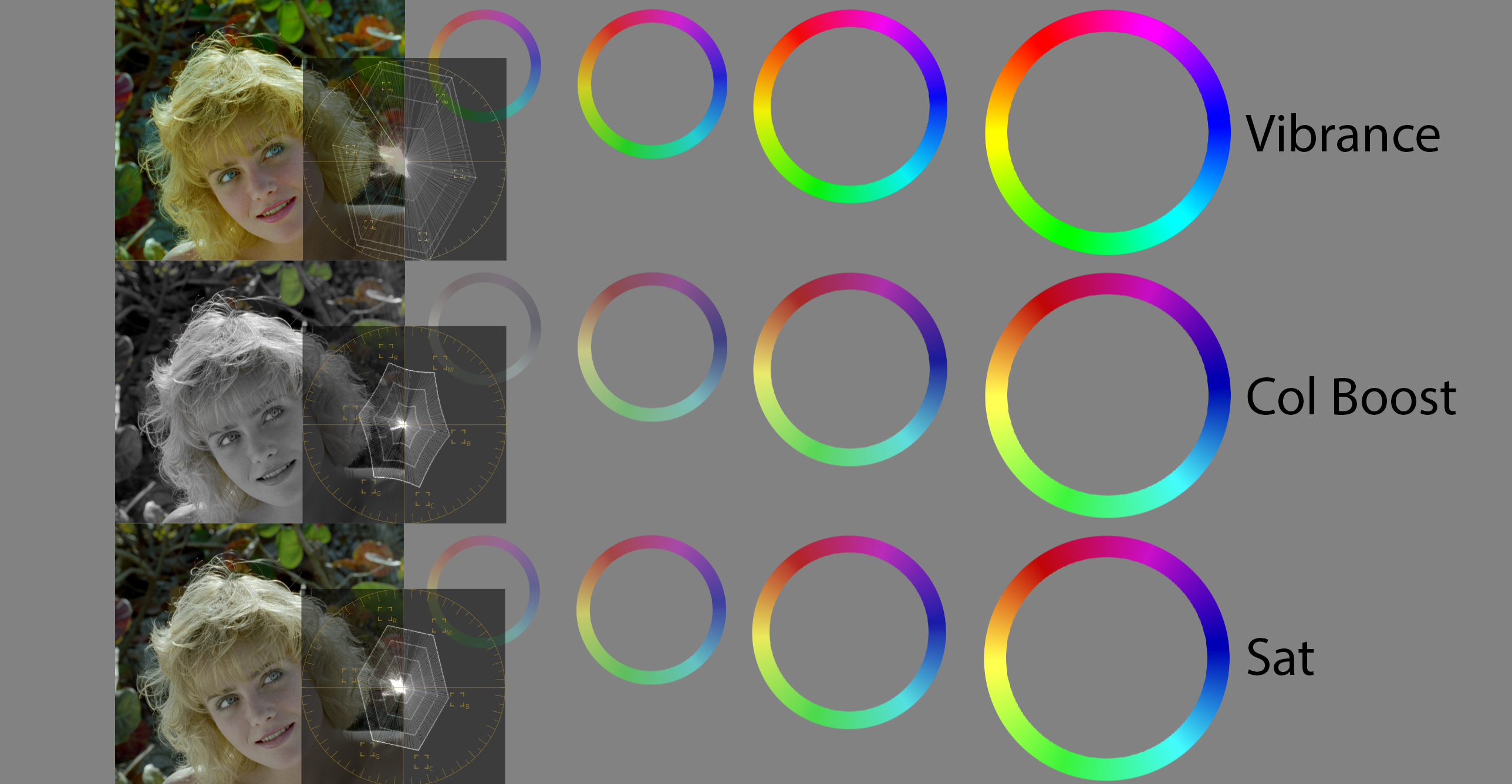

In an image whose pixels are stored as RGB values, "saturation" as such does not exist. To modify it, we must first convert to a model such as HSL.

In this color model, the S channel can be edited with simple math. To scale saturation, multiply the pixel values of the S channel.
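Here is a minimal sketch of that round trip using Python's standard `colorsys` module, which names the model HLS and returns the components in H, L, S order. The function name `scale_saturation` and the sample orange are hypothetical, and clipping S to 0..1 is an assumption about how out-of-range results are handled.

```python
import colorsys


def scale_saturation(r, g, b, gain):
    """Convert RGB (0..1) to HSL, multiply S by `gain`, clip to 0..1 and convert back."""
    h, l, s = colorsys.rgb_to_hls(r, g, b)  # colorsys returns H, L, S in that order
    s = min(max(s * gain, 0.0), 1.0)
    return colorsys.hls_to_rgb(h, l, s)


orange = (0.8, 0.4, 0.2)
print("half saturation:", tuple(round(c, 3) for c in scale_saturation(*orange, 0.5)))
print("no saturation  :", scale_saturation(*orange, 0.0))
```

A gain of 0 leaves a grey at the HSL lightness of the original color. A gain of 1 returns the original color unchanged.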

In the RGB model, Gain is applied by multiplication. The odd name is a legacy of old analog devices, where Gain controlled the signal by scaling it. Saturation is therefore usually controlled with a multiply function, but that is not the only function that can be applied to S.

Just as Lift is used in RGB (invert, multiply, invert), the same function can be applied to the S channel.

This parameter is called Vibrance.
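The difference between Gain and this Lift-style operation can be seen on bare S values. This sketch follows the description above, taking Lift as invert, multiply, invert, i.e. `1 - (1 - s) * k`. It is not Resolve's actual Vibrance code. The names `gain_s` and `lift_s` and the chosen factors are hypothetical.

```python
def gain_s(s, k):
    """Gain on S: multiply, then clip to 0..1."""
    return min(max(s * k, 0.0), 1.0)


def lift_s(s, k):
    """Lift on S: invert, multiply, invert, i.e. 1 - (1 - s) * k, clipped to 0..1."""
    return min(max(1.0 - (1.0 - s) * k, 0.0), 1.0)


for s in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"S={s:.2f}  gain x1.25 -> {gain_s(s, 1.25):.3f}   lift k=0.8 -> {lift_s(s, 0.8):.3f}")
```

With `k` below 1, Lift raises weakly saturated values the most and leaves S = 1 where it is, while Gain pushes the most saturated values into clipping. Note that this literal form also lifts S = 0 to `1 - k`, just as Lift in RGB raises black.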

Many colorists are confused by this parameter because Vibrance also modifies the luminance of colors. This happens because saturation is calculated in HSL space rather than in a chromaticity-based model such as YUV or Lab. HSL estimates saturation with an inexact criterion, in which a color counts as fully saturated whenever one of its RGB channels is at zero (or, for light colors, whenever one channel is at its maximum).
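Python's standard `colorsys` module, which orders its result H, L, S, shows how loose this criterion is. Every sample below gets S = 1.00, including a pastel pink whose channels are all well above zero, because in standard HSL S also reaches 1 when the brightest channel is at its maximum. Pure blue and pure yellow share the same HSL lightness of 0.50, yet their Rec.709 luma is about 0.07 and 0.93. Rec.709 luma is used here only as a stand-in for perceived brightness. The helper names and sample colors are hypothetical.

```python
import colorsys


def luma709(r, g, b):
    """Rec.709 luma weights applied to normalized R'G'B'."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def hsl_report(r, g, b):
    """Return (HSL saturation, HSL lightness, Rec.709 luma) for one color."""
    _, l, s = colorsys.rgb_to_hls(r, g, b)
    return s, l, luma709(r, g, b)


samples = {
    "pure red": (1.0, 0.0, 0.0),
    "dark red": (0.5, 0.0, 0.0),
    "pastel pink": (1.0, 0.5, 0.5),
    "pure blue": (0.0, 0.0, 1.0),
    "pure yellow": (1.0, 1.0, 0.0),
}
for name, rgb in samples.items():
    s, l, y = hsl_report(*rgb)
    print(f"{name:12s} S={s:.2f}  L={l:.2f}  luma={y:.3f}")
```

Since HSL treats all these colors as equally saturated and blue and yellow as equally light, any operation that reshapes S while holding HSL L fixed can still shift perceived brightness.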

Another function on the S channel is Add. It does the same thing Offset does in RGB, but on S the results are very different.

DaVinci Resolve calls this parameter Color Boost. But it is not equivalent to Vibrance!

The name Col Boost misleads colorists (the user manual also describes it incorrectly) into treating this control as similar to Vibrance. That is wrong: the parameter actually applies an offset to the saturation of all values, clipping at 0 and 100, just as Offset does in RGB.
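Following the description above (an offset on S, clipped at 0 and 100%, here written as 0..1), this sketch compares that behavior with the Lift-style operation described for Vibrance. It is an illustration of the described math, not Resolve's code. The names `offset_s` and `lift_s` and the chosen amounts are hypothetical.

```python
def offset_s(s, amount):
    """Add `amount` to S and clip to 0..1 (0..100%), like Offset in RGB."""
    return min(max(s + amount, 0.0), 1.0)


def lift_s(s, k):
    """Lift on S for comparison: 1 - (1 - s) * k, clipped to 0..1."""
    return min(max(1.0 - (1.0 - s) * k, 0.0), 1.0)


for s in (0.0, 0.5, 0.85, 0.95, 1.0):
    print(f"S={s:.2f}  offset +0.10 -> {offset_s(s, 0.10):.2f}   lift k=0.9 -> {lift_s(s, 0.9):.3f}")
```

With the offset, every value moves by the same amount, so S = 0.95 and S = 1.0 both land on 1.0 and become indistinguishable. The Lift form compresses values toward 1 without merging them.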